Detecting fake news in the age of Machine Learning

An interview with Trifork’s Halleh Khoshnevis.

During the 2016 U.S presidential elections, we got confronted with a wave of fake news websites, blogs and digital accounts designed to support targeted opinions and to generate clickbait traffic.

The influence of these sites on the election was covered extensively by credible news organisations with a high-level coverage. They were concerned about the dangers of fake news and their potential association with fake news sites.

Their concern grew stronger as President Trump used Twitter (and continues to this day) to ridicule mainstream media further through labels such as “fake news” and “fake media.” These labels were perceived by many credible journalists as Trump’s attempt to distract the public from media reports – since most of them have been critical of Trump’s presidency – in order for Trump to position himself as the only reliable source of truth.

Nonetheless, this is not the first time that ‘’fake news’’ has polarised the general public and threatened democracy. Plenty similar stories have occurred through the years, making one wonder how it is possible that we still have fake news appearing in the media and can’t fully track and recognise it today.

Fake news won’t just disappear in the hush of a night. Especially now with all social channels where a fake account, blog or website is created as fast and easy as today, allowing anyone to have fun, get paid or even have substantial influence on public opinion by spreading lies.

We have reached a point where people question almost all messages presented to them by media.

Of course, people are working on solutions and they are using all kind of means, including technology. It is also here, in detecting fake news, where technologies like Machine Learning and Artificial Intelligence prove their value.

Trifork’s Machine Learning engineer Halleh Khoshnevis is one of many professionals working on fake news detection. Trained as a computer scientist during her B.Sc, Halleh found early great opportunities to work with algorithms and optimization problems. With a focus on Artificial Intelligence and passion towards pattern recognition and Machine Learning, she found herself speaking last November at the European Women in Tech 2018 conference. I took some time of her busy schedule to discuss her approach and decision to work on fake news detection.

Why did you decide to work on fake news detection?

As you know fake news is a very hot topic right now. Everyone talks about it today, starting from the 2016 US Elections and the way president Trump used this term.

Information, freedom of speech and expression, have all been at the core of democracy since its birth. Conversely, the same ideas are threatened and misinterpreted today and this is why I decided to work on fake news detection. I totally believe in Machine Learning and especially Natural Language Processing as the best way to detect fake news.

Why do you think that fake news detection systems are relevant today?

I believe these systems are important first of all to global societies and second to news/media companies. Both printed media and their modern counterparts carry the responsibility of sharing the truth with the public. As they follow this principle, it is essential they detect fake news before using it for (background) information or it even distributing it on their platforms and channels.

Additionally, an important part of the public has already lost its trust in media today, showing an aversion against everything that they can’t immediately confirm as real or fake.

For a media organisation the identification of a source as fake news is so far a very time-consuming process that asks for intelligence and ofte a lot of time.

However, Machine Learning and the approach that I have been developing at Trifork, can turn the aforementioned discovery process into an easier and faster process, involving less effort and more accuracy, dealing with fake news detection.

Can you provide us with an overview of your approach?

The traditional Linguistic Literature method was my starting point. According to it, fake articles are detected based on irregularities found into the fundamental structure of a sentence as extracting information out of it. One way to do this is through the Centering Resonance Analysis.

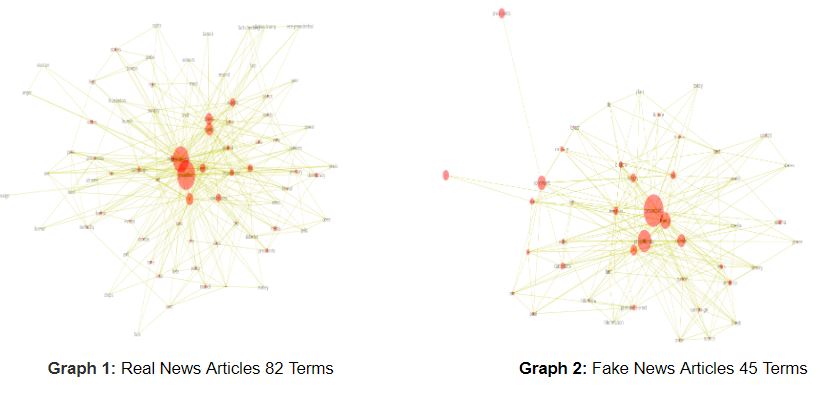

In Centering Resonance Analysis we assume that the most important part of a sentence is its nouns. Thus, by extracting all the nouns out of it, we create a graph/network of all the nouns in all the documents that we have. A graph of nouns gathered from real stories (Graph 1) and one from fake (Graph 2) can look as below:

We observe that the topology of both graphs is different. For real news articles Graph 1 we clearly identify a broader, richer in content and context and easily relatable graph. On the contrary Graph 2 for fake stories, is narrower in topics and nouns all with a negative prism.

With 3000 fake and 3000 real stories analysed as explained earlier, I moved on by collecting the title, text and label of each of the articles used, in addition to checking their source.

Then, I went through the Machine Learning pipeline consisted of the stages of Preprocessing, Feature Extraction, Train Classifier and Testing. An ML pipeline works as a loop going back and forth, where you can make continuous changes in your data to enrich your final results.

In the Preprocessing phase I had to ‘’clean’’ my data of all unnecessary information which had the potential of reducing accuracy on my final results, erasing the so called ‘’noise’. Once it was gone, I extracted all important information and enriched my data further.

When my data was finally ready I applied classifiers to train it, making a model able to work also on different data. It is all about testing, enriching your data and testing again until you reach a higher level of accuracy on your final results.

From the data I had, I used 80% to create the different models. The 20% I had left I used to test the model on articles that were not in the database I used to create the model

My overall results in fake news /real news detection were:

- Decision Tree : Accuracy 79.9%

- Multinomial Naive Bayes’: Accuracy 87.5%

- Linear SVM: Accuracy 92.3%

Of course this is not yet a 100% score. However, I expect to improve the model and already these results can help a lot in recognising suspicious articles and saving time in the identification of fake news.

Did you face any struggles during the development process?

I am glad you ask this question. As you know Machine Learning is all about data. In order to work on my process I had to use datasets, which should have already been audited as real or fake.

This is still a manual process with people going through articles, blogs etc, fact checking and labelling them accordingly. Consequently, there is a certain level of subjectivity to it. To my surprise – based on some articles I read – many people are faced with depression during the auditing process, while reviewing stories on unhappy topics, unable to decide if the story is real or fake after all.

Finding a good dataset to run my tests was definitely the biggest challenge that I faced and one that other colleagues are also faced with on a daily basis.

You spoke at European Women in Tech 2018. How was your experience?

The invitation to speak at this event came out of nowhere really. I was contacted by someone in the UK who informed me about the conference and asked if I was interested to speak. It is definitely an important initiative highlighting that women are welcome to join any field they want as long as they are passionate about it. Also, it invites employers to hire more females by increasing demand in fields like science and engineering.

My overall experience was great, with around 3.000 people around and many speakers and attendees coming from Iran my home country, it was truly special. Nevertheless, despite how interesting and important these events are, I totally believe that the change we all seek in this world comes first from within us.

‘’Nobody can stop you from achieving your goals no matter your gender.

If there is any limitation it is only the individual limitation ’’

Which was Trifork’s contribution in the whole process?

It all starts with Trifork’s motto:

Based on Machine Learning hackathons and workshops I attended with Trifork’s Machine Learning team, I was able to get inspired for this project. I started to think: What is a real business problem out there that we can solve?

This is how I came up with this topic and decided to work on it along with my colleagues at Trifork. I had all time and support from them to work on this idea, getting high level feedback and insights from my colleagues.

Future expectations on your project? Ideas for development?

Recent studies have shown that producers of fake news tend to use high level of vocabulary and grammar. This is their way to make it look as real as possible. However, at present, it brings them the opposite results. It works as a proof of it being fake by having way more complicated grammar/vocabulary than real news. In that way, if we can detect this complexity in the structure of a sentence, we can use it as an added feature to our process, for better results.

Further, we can take into account the ORS (Online Relevance Score) out of a search engine search. While searching for a specific topic we can observe if the first 10 results of our search are related to what we are searching for. If the relevance is high, currently, it means most of the time that this topic is real and has been discussed and written about by many already. The opposite can be an evidence of fake news.

Lastly, by checking the author’s credibility with a content network analysis, we can add yet another layer to our process. Content Network Analysis is a cross check between the author and his network. If the author is not well known but is connected to credible and established authors, then it is more likely that he isn’t producer of fake news. If instead he is only connected to biased authors, then possibly he his articles need double checking.

I would like to ask for anyone interested in this area to attend my presentation at the Codemotion conference in Amsterdam coming April. I will be bringing an upgraded version of my model up for discussion.

All in all, this project has opened up to me a new field that I hadn’t worked in before, and I am very glad both to explore it and exchange knowledge with other colleagues along the way. At Trifork we have been working with Machine Learning and Artificial Intelligence for some time. Because of this, we have put together several standards or structures how we put together what we offer. If you are interested, invite you to explore the Trifork Agile approach to Machine Learning here

Trifork

Read what else Trifork is doing with Machine Learning on our website:

https://trifork.com/machine-learning/