Going Cuckoo over Bloom filters

Sometimes you encounter a situation where you would like to do a simple check to see whether something is needed before you get started. This was the case recently on a project where we asked various questions to several internal services, based on a possible subscription. Ideally, we would rather not do that if we already know in advance that someone does not have a subscription.

Sure, you can make a call to the database to see if certain records exist or not, but that remains a call to a database and they are not necessarily cheap. Certainly not if you can then go back to retrieve the details if it turns out that the person is known to have a subscription. An additional and complicating factor for us was that we have a somewhat unbalanced usage pattern, to say the least. During longer periods it can be quite slowgoing and sometimes it takes less than 5 minutes to go from processing virtually no calls to many thousands of calls per minute. Then you prefer to cut off what is not necessary as early as possible in the flow.

Bloom filter

Instead of another heavier database server and even more service instances to handle the traffic, this time we looked at alternative ways to determine whether someone belongs to something or not. We ended up with Bloom filters. This technique was originally invented in 1970 by Burton Howard Bloom and is a variant of a technique called probabilistic data structures. These build on set theory and can indicate whether something belongs to a set with a high probability, or whether it is guaranteed it does not.

In short, it works as follows. You take an identifier of the object that you want to know whether it is part of the set or not. You generate several hashes from this, which then go into a data structure that behaves as a bit vector (and often is). If you then receive a request with that identifier, you can generate the same hashes and if the result is in the Bloom filter, you have a high degree of certainty that you already have that identifier in your set, and therefore the associated entity is known.

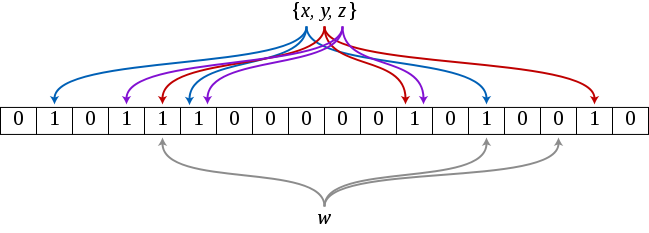

This is what it looks like when you add x, y, and z to the filter and then check if w is in the set as well.

The hash values put a 1 in the places that match for x,y, and z. Since one of the positions for w returns a 0, that means that w is not in the set.

You can influence the degree of certainty by using a larger (or smaller) vector and by using more (or fewer) hash functions. This is of course at the expense of a little more memory space (and computation time), but it is still many times smaller than caching the key or the associated object itself. As a rule of thumb, you can use ±10 bits per key when using the classic Bloom filter. Some variations on the classic algorithm overcome certain negative properties, but often at the expense of space requirements.

An implementation with Guava

Of course, you don’t have to do all those calculations yourself. You can leave that to a library that implements this for you. A well-known example in Java is the Google Guava library. Creating a bloom filter is as simple as

BloomFilter<Integer> bloomFilter = BloomFilter.create(Funnels.integerFunnel(), 100);

This uses a default of 3% for the chance of false positives. An important point is to properly estimate your expected data set. In this case, I have set it to 100, but if you go well over that, the chance of a false positive goes way over the configured 3%.

If you want a lower chance, you can also specify that. For example, you get 1% like this:

BloomFilter.create(Funnels.integerFunnel(), 100, 0.01);

To make it more concrete, we can use an example analogous to the project why I looked into this in the first place. Suppose we have a system that manages customers, their profiles, subscriptions, payments and shipments. This involves all kinds of management functionalities and some of them require you to submit queries to various external services. To prevent us from going there when the customer is unknown, we can use a Bloom filter to keep track of whether we probably already know that customer or not. Something like the following:

@RestController

public class CustomerController {

private final BloomFilter<String> customerBloomFilter =

BloomFilter.create(Funnels.stringFunnel(StandardCharsets.UTF_8), 1_000_000, 0.02);

@GetMapping

public ResponseEntity<Customer> getCustomer(String id) {

if (customerBloomFilter.mightContain(id)) {

return ResponseEntity.ok(customerService.findCustomerData(id));

} else {

return ResponseEntity.notFound().build();

}

}

@PutMapping

public void addCustomer(String id, String name) {

// do stuff...

customerBloomFilter.put(id);

}

}

In itself, you have seen the basic functionality of Bloom filters. However, there are still a few things that remain a challenge.

Initialization of your filter

First of all, how to initialize your Bloom filter. Since this is in-memory storage, it will return empty after restarting your application. This then becomes difficult when retrieving details for existing customers. You can choose to solve this when you start your application by streaming the list of customers and adding all IDs to the filter. Does that work? Yes, but it’s not ideal.

If you use Guava, you can also choose to write the state of the filter to persistent storage periodically or upon shutdown via filter.writeTo(outputStream) and then read it again at startup via filter.readFrom(inputStream, Funnels.stringFunnel(StandardCharsets.UTF_8)).

Can you also delete identifiers?

If you have a use case that includes a bit more volatility in the dataset, you may also want to remove data from your Bloom filter. Unfortunately, that is not possible. Not because the implementations do not support that, but because the concept of a Bloom filter does not work that way.

You could then choose to periodically reset your filter by reloading the data from your data store. You must ensure that you do this carefully and do not forget to include any new customers who come in during such a reset.

In addition, there are variations of the classic algorithm that offer a solution for this, but this is often at the expense of extra memory use and/or performance for calculations. If we look a little further than just Bloom filters within this category of algorithms, there is another one that can do that: Cuckoo filters.

One to consider, since in many cases they work better than standard Bloom filters. Except for one ‘small’ detail. The implementations are a lot more difficult to find than those for Bloom filters, unfortunately. The Guava library i.e. doesn’t have a Cuckoo filter implementation.

Bloom filters in a distributed world…

Finally, there is another challenge for distributed applications. Pretty standard in most cloud deployments, but also if i.e. you already run a service in high availability.

If your dataset changes even slightly over time, you will have to pass those changes on to the other service agencies. And most implementations of Bloom filters will not help you with this, you will have to work that out yourself.

One option is to use events for actions that result in a change to your set. By publishing this to all subscribing services, for example, to a topic on a messaging platform, they can adopt this change fairly quickly and you will have minimal inconsistency. However, if you do not yet have MQ or a similar service in your system, that could become a blocker. You would introduce a new dependency into your system.

Another alternative is to use Redis with the Bloom filter module. The latter contains, not surprisingly, an implementation of a Bloom filter, but also one of the Cuckoo filter. By using this, you share the filter with all connected services.

And if you also use the Redis feature server-assisted client-side cache for this object, you can get it almost as fast as if the filter were always available locally. I won’t explain how this works here in the margins. That requires a little more space ;-).

It is worth mentioning that not all Java clients for Redis support the full set of modules, but Jedis certainly does. Also, some cloud providers have hosted Redis options, but usually without the modules so you may have to set that up yourself.

Other scenarios

The above scenario is one that still requires quite a few changes to the dataset, and I haven’t even addressed the GDPR-backed right-to-be-forgotten for (former) customers yet ;-). Ideally, you use Bloom filters for something that rarely or never changes. This also makes it easier to estimate the expected number of insertions. Too high and you use more hash functions than necessary and that costs processing time. Too low and you run the risk that your false positive rate will be significantly higher than you would like.

In addition, in this scenario, I made use of a globally maintained Bloom filter. That may not always be necessary or recommended. For example, you can read a blog by Hani Suleiman about the use of Bloom filters in recommendations for Prime TV viewers. There the filter is passed via the request context. By nature, it is a filter tailored for that particular customer.

And this also shows that we don’t always have to use the latest or perfect solution to deliver something useful.

This article previously appeared (in Dutch) in Java Magazine 2023 #3 by the NLJUG