Next step in virtualization: Docker, lightweight containers

Lately, I have been experimenting with Docker, a new open source technology based on Linux containers (LXC). Docker is most easily compared to virtual machines (VMs): both technologies allow you to create multiple distinct virtual environments that can run on the same physical machine (the host). Docker also shares characteristics with configuration management tools like Chef and Ansible: you can write a build file (a Dockerfile) containing a few lines of script with which an environment can be set up easily. It’s also a deployment tool, as you can simply pull and start images (e.g. some-webapp-2.1) from a private or public repository on any machine you’d like, be it a colleague’s laptop or a test or production server.

But you’re already using all those other tools, so why would you need Docker? In this blog entry, I’d like to answer that question and give a short introduction to Docker. In my next blog entry (coming soon) I’ll dive into using Docker, specifically covering how to set up Tomcat servers.

Why use Docker?

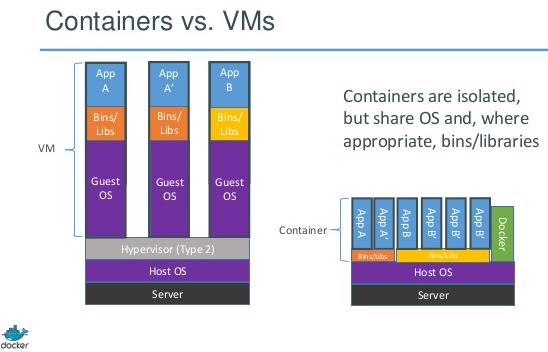

I guess efficiency is the most important reason to use Docker. Docker containers (which are running instances of Docker images) share the host’s kernel instead of each booting a full guest OS, which is the biggest difference from VMs. This means that containers can share resources efficiently. Images themselves are a stack of diffs on top of a base image (such as Ubuntu 12.04), taking up no more space than they need to. Containers built from overlapping layers share those layers and only use extra disk space once they start altering or adding files. This is illustrated in the image, which I took from a presentation on the Docker website.
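To make the layering idea a bit more concrete, here is a toy model in Python. It is not how Docker actually stores images (real storage drivers work on actual filesystems); it only mimics the lookup rules, assuming each layer is a plain dict from file path to contents. `collections.ChainMap` searches its mappings in order but writes only to the first one, which behaves like a container’s writable layer on top of read-only image layers. The layer names and file contents are made up for illustration.

```python
from collections import ChainMap

# Toy model only: each "layer" is a dict mapping file paths to contents.
# Real Docker storage drivers operate on actual filesystems, not dicts.
base_image = {"/etc/os-release": "Ubuntu 12.04", "/bin/sh": "<shell>"}
java_layer = {"/usr/bin/java": "<jdk 7>"}  # hypothetical diff added by a build step


def start_container(*image_layers):
    """Return a container view: a fresh writable layer on top of read-only image layers.

    Layers are given top-most first. ChainMap looks keys up from left to right,
    but writes and deletes only ever touch the first mapping, i.e. the
    container's own (initially empty) layer.
    """
    return ChainMap({}, *image_layers)


# Two containers started from the same image share the same underlying layer objects.
c1 = start_container(java_layer, base_image)
c2 = start_container(java_layer, base_image)

c1["/tmp/app.log"] = "started"     # stored only in c1's writable layer
c1["/etc/os-release"] = "patched"  # shadows the base file for c1 only

print(c1.maps[0])                     # {'/tmp/app.log': 'started', '/etc/os-release': 'patched'}
print(c2["/etc/os-release"])          # Ubuntu 12.04
print(base_image["/etc/os-release"])  # Ubuntu 12.04
```

Only the first container’s own layer grows; the image layers stay untouched and keep being shared, which is the “use more disk space only once they start altering or adding files” behaviour in miniature.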

A difference between Docker and Chef or Ansible is that images also contain their own OS userland (packages, libraries and tools), separate from the host OS; only the kernel is shared. So upgrading the host system will not affect your application, and it’s even easy to keep the OS state in sync across an arbitrarily large number of interacting nodes. Note that you could still use Chef cookbooks to create an image.

Docker is also very easy to use. I’m not a DevOps engineer, so I’d be interested in hearing from experienced DevOps people on this point. However, I’m impressed with the caching mechanism used while building images: a small change in the final build step really is a small change, and doesn’t require long minutes of downloading the same things again (very different from testing Ansible scripts on a VM). I’m also keen on trying out the repository system, with its simple push and pull syntax. Managing containers could be improved upon, I guess, but I’ve already been shown some simple cleanup scripts.
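The reason a small change at the end stays small can be sketched with a toy cache in Python. The assumption here is that a build step is identified by its parent layer plus the instruction text, so every step before the changed one is a cache hit. This approximates the idea behind Docker’s build cache; the real builder has additional rules that this sketch ignores, and the layer ids are just hashes made up for the example.

```python
import hashlib

# Toy build cache: (parent layer id, instruction) -> resulting layer id.
cache = {}
executed = []  # instructions that actually had to run (cache misses)


def run_step(parent_id, instruction):
    """Pretend to execute one build instruction and return a new layer id."""
    executed.append(instruction)
    return hashlib.sha256(f"{parent_id}|{instruction}".encode()).hexdigest()[:12]


def build(instructions, base="ubuntu:12.04"):
    """Build layer by layer, reusing cached layers whenever parent and instruction match."""
    layer = base
    for instruction in instructions:
        key = (layer, instruction)
        if key not in cache:  # cache miss: run the step and remember its result
            cache[key] = run_step(layer, instruction)
        layer = cache[key]    # either the cached layer or the freshly built one
    return layer


steps = [
    "RUN apt-get update",
    "RUN apt-get install -y openjdk-7-jdk",
    "RUN mkdir -p /srv/app",
]
first = build(steps)
executed.clear()
second = build(steps[:-1] + ["RUN mkdir -p /srv/webapp"])  # change only the last step
print(executed)  # ['RUN mkdir -p /srv/webapp']
```

Note that keying on the parent layer means that changing an early step invalidates everything after it, which is why slow, rarely changing steps (like installing a JDK) are best placed near the top of a build file.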

As a small disclaimer, note that Docker is still under heavy (and very active) development. While the underlying technology, Linux containers, is some years old now (and still being developed), Docker arrived only 4 months ago. This means, for instance, that the latest Ubuntu LTS (12.04), while supported, needs a kernel upgrade before it can host your Docker containers. From 12.10 onwards the standard kernel is good enough, but Linux distributions other than Ubuntu (or Mint) are not supported yet.
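If you want to quickly check whether a host’s kernel is new enough, a small Python sketch like the following could help. The minimum version is a hypothetical placeholder, picked only so that example release strings for a 3.2 kernel (as shipped with Ubuntu 12.04) and a 3.5 kernel (as shipped with 12.10) fall on either side of it; look up the actual requirement in the Docker installation documentation. The check only compares the `major.minor` part of the release string, which `platform.release()` reports for the current machine.

```python
import platform
import re

# Hypothetical placeholder threshold (major, minor); not an official Docker requirement.
REQUIRED = (3, 5)


def kernel_version(release):
    """Parse the leading 'major.minor' of a kernel release string such as '3.2.0-23-generic'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def kernel_at_least(minimum, release=None):
    """Return True if the kernel release (default: this machine's) is >= minimum."""
    if release is None:
        release = platform.release()
    return kernel_version(release) >= minimum  # tuple comparison: major first, then minor


print(kernel_at_least(REQUIRED, "3.2.0-23-generic"))  # False
print(kernel_at_least(REQUIRED, "3.5.0-17-generic"))  # True
```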

Further resources you really can’t get around, but which I’ll mention anyway, are:

- The official Docker website, which has really become a better place with the new Docker logo. Once there, I doubt you’ll miss the documentation section 😉

- The public Docker index, where you can search for images contributed by the community.

- The Google mailing list and Stack Overflow, both also clearly linked from the website. They are interesting to just scroll through to learn more about techniques and use cases.

In my next blog entry, I’ll dive into setting up Tomcat 7 with the Oracle Java 7 JDK, so (and I never thought I’d say this, but…) stay tuned!